Filebeat supports using Ingest Pipelines for pre-processing. In fact, it already uses them for all of its existing modules, such as apache2, mysql, syslog, and auditd. When you configure Filebeat to send data directly to Elasticsearch, it uses its predefined module pipelines. Basically, you have two choices: change the existing module pipelines to fine-tune them, or create a new custom Filebeat module where you can define your own pipeline.

# Parse Apache Logs with Filebeat

I also suppose that the code behind these processors is pretty much the same. Most of the processors you have in Logstash are also available in Ingest Pipelines, including the most important one: grok.

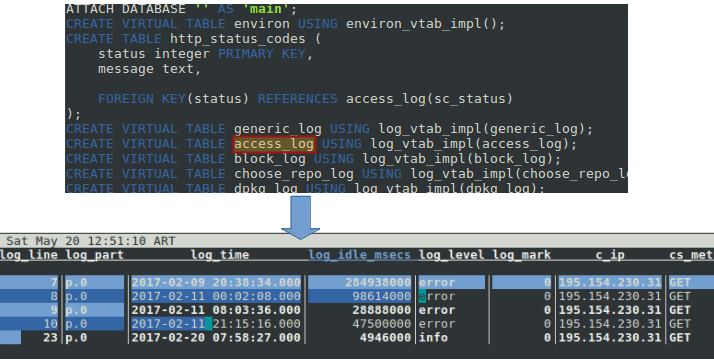
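To make the grok point concrete, here is a minimal Python sketch. It builds an ingest pipeline body with a single `grok` processor and wraps it in a request body for Elasticsearch's `POST _ingest/pipeline/_simulate` endpoint, so the pipeline can be tried in Kibana Dev Tools before it is stored. Several parts are assumptions for illustration: the simplified Apache access-log pattern (built from standard grok names such as `IPORHOST` and `HTTPDATE`), the `message` source field, and the helper names `build_pipeline` and `build_simulate_request`. Serializing with `json.dumps` also shows why hand-edited pipeline JSON needs care: every backslash and double quote in a pattern must be escaped.

```python
import json
from typing import Dict, List

# Assumed, simplified grok pattern for an Apache access line (illustrative only).
# The raw string keeps the regex backslashes literal on the Python side.
APACHE_PATTERN = (
    r'%{IPORHOST:clientip} %{USER:ident} %{USER:auth} '
    r'\[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{NOTSPACE:request} '
    r'HTTP/%{NUMBER:httpversion}" %{NUMBER:response} (?:%{NUMBER:bytes}|-)'
)


def build_pipeline(pattern: str) -> Dict:
    """Return an ingest pipeline body with a single grok processor on `message`."""
    return {
        "description": "Parse Apache access lines (sketch)",
        "processors": [
            {"grok": {"field": "message", "patterns": [pattern]}},
        ],
    }


def build_simulate_request(pipeline: Dict, messages: List[str]) -> Dict:
    """Wrap a pipeline and sample log lines into a _simulate request body."""
    return {
        "pipeline": pipeline,
        "docs": [{"_source": {"message": msg}} for msg in messages],
    }


if __name__ == "__main__":
    sample = ('127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] '
              '"GET /apache_pb.gif HTTP/1.0" 200 2326')
    body = build_simulate_request(build_pipeline(APACHE_PATTERN), [sample])
    # json.dumps escapes the pattern for JSON: "\[" becomes "\\[" and each
    # double quote becomes \", which is what a hand-written body must contain.
    print(json.dumps(body, indent=2))
```

Pasting the printed JSON under `POST _ingest/pipeline/_simulate` in Dev Tools should show the fields extracted from each sample document. Once the output looks right, the same `pipeline` object is what you would store with `PUT _ingest/pipeline/<id>`.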

Ingest Pipelines are a powerful tool that Elasticsearch gives you to pre-process your documents during the indexing process. Key topics when working with Filebeat ingest pipelines:

- What are ingest pipelines and why do you need to know about them?
  - Some pros that make Ingest Pipelines a better choice for pre-processing compared to Logstash
  - They have most of the processors Logstash gives you
- Modifying existing pipeline configuration files
  - Telling Filebeat to overwrite the existing pipelines
- Troubleshooting or creating pipelines with tests
  - Testing and troubleshooting pipelines inside Kibana (Dev Tools)
  - First, let's take the current pipeline configuration
  - Creating a pipeline on the fly and testing it
- Updating Filebeat after modifying existing pipelines
- Having multiple Filebeat versions in your infrastructure
- Having syntax errors inside a Filebeat pipeline definition
- Escaping strings in pipeline definitions

# Logstash Multiline Tomcat and Apache Log Parsing

Update: The version of Logstash used in the example is out of date, but the mechanics of the multiline plugin and grok parsing for multiple timestamps from Tomcat logs are still applicable. Additionally, the multiline filter used in these examples is not thread-safe. I have published a new post about other methods for getting logs into the ELK stack, and it includes an updated example that uses the multiline codec with the same parsers.

Once you've gotten a taste for the power of shipping logs with Logstash and analyzing them with Kibana, you've got to keep going. My second goal with Logstash was to ship both Apache and Tomcat logs to Elasticsearch and use Kibana to inspect what's happening across the entire system at a given point in time. Most of the apps I write compile to Java bytecode and use something like log4j for logging. The logging isn't always the cleanest, and there can be several conversion patterns in one log.

Figure: Kibana showing Apache and Tomcat responses over a 24-hour period (at 5-minute granularity).

Parsing your particular log's format is going to be the crux of the challenge, but hopefully I'll cover the thought process in enough detail that parsing your own logs will be easy. The Apache log format is the default Apache combined pattern (`"%h %l %u %t \"%r\" %>s %b \"%`…).
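To illustrate the fields a combined-format parse has to extract, here is a minimal Python sketch. It uses a plain regular expression instead of grok and assumes the standard combined format, which is the common format plus quoted `Referer` and `User-Agent` fields. The field names are chosen to resemble the legacy output of grok's `COMBINEDAPACHELOG` pattern, and `parse_combined` is a hypothetical helper, not part of Logstash or Elasticsearch.

```python
import re
from typing import Dict, Optional, Union

# Assumes the standard combined format:
#   %h %l %u %t "%r" %>s %b "%{Referer}i" "%{User-Agent}i"
# Group names loosely mirror grok's legacy COMBINEDAPACHELOG fields.
# Simplification: escaped quotes (\") inside quoted fields are not handled.
COMBINED_RE = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<request_line>[^"]*)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"$'
)


def parse_combined(line: str) -> Optional[Dict[str, Union[str, int]]]:
    """Parse one combined-format line; return None if it does not match."""
    m = COMBINED_RE.match(line)
    if m is None:
        return None
    fields: Dict[str, Union[str, int]] = dict(m.groupdict())
    request_line = str(fields.pop("request_line"))
    parts = request_line.split(" ")
    if len(parts) == 3 and parts[2].startswith("HTTP/"):
        # Well-formed request line such as "GET /path HTTP/1.1".
        fields["verb"], fields["request"] = parts[0], parts[1]
        fields["httpversion"] = parts[2][len("HTTP/"):]
    else:
        # Anything else is kept verbatim, similar to grok's rawrequest fallback.
        fields["rawrequest"] = request_line
    fields["response"] = int(fields["response"])
    # %b writes "-" instead of 0 when no response body bytes were sent.
    fields["bytes"] = 0 if fields["bytes"] == "-" else int(fields["bytes"])
    return fields


if __name__ == "__main__":
    sample = ('127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] '
              '"GET /apache_pb.gif HTTP/1.0" 200 2326 '
              '"http://www.example.com/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"')
    print(parse_combined(sample))
```

In a Logstash or ingest-pipeline setup, grok's built-in `COMBINEDAPACHELOG` pattern covers this format. The regex version only makes the structure of each field visible, including the edge cases (`-` for missing bytes, malformed request lines) that a real pattern also has to tolerate.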
